Hadoop Deployment, Part 3: Hadoop

I. Introduction to Hadoop

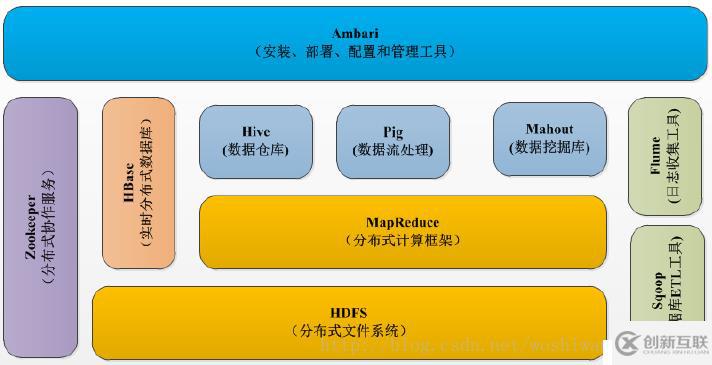

The core of the Hadoop framework consists of HDFS and MapReduce: HDFS provides storage for massive data sets, and MapReduce provides computation over them.


1. HDFS overview

Hadoop implements a distributed file system, the Hadoop Distributed File System (HDFS).

HDFS is highly fault-tolerant and is designed to be deployed on low-cost hardware. It provides high-throughput access to application data, which suits applications with large data sets. HDFS relaxes some POSIX requirements so that file system data can be accessed as a stream (streaming access).

2、HDFS 組成

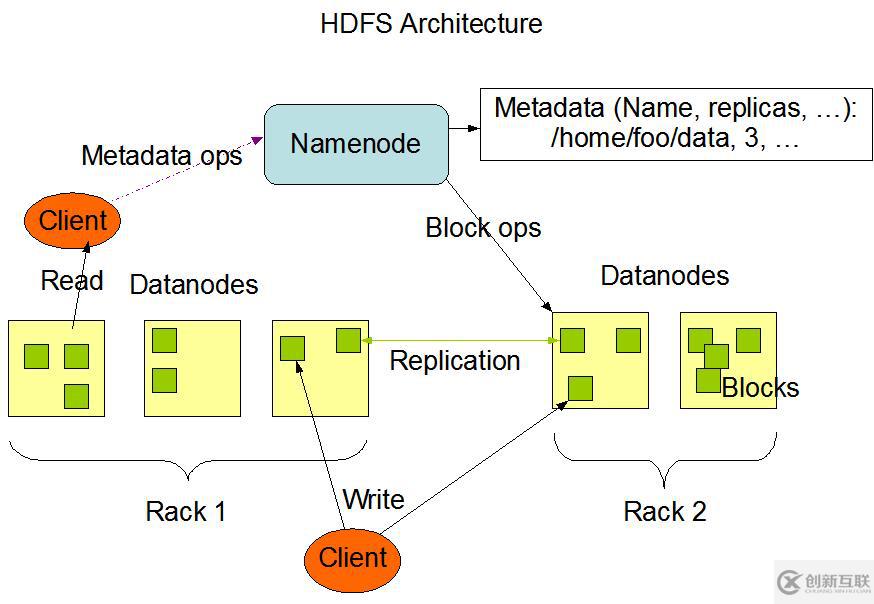

HDFS采用主從(Master/Slave)結(jié)構(gòu)模型,一個(gè)HDFS集群是由一個(gè)NameNode和若干個(gè)DataNode組成的。NameNode作為主服務(wù)器,管理文件系統(tǒng)命名空間和客戶端對(duì)文件的訪問操作。DataNode管理存儲(chǔ)的數(shù)據(jù)。HDFS支持文件形式的數(shù)據(jù)。

從內(nèi)部來看,文件被分成若干個(gè)數(shù)據(jù)塊,這若干個(gè)數(shù)據(jù)塊存放在一組DataNode上。NameNode執(zhí)行文件系統(tǒng)的命名空間,如打開、關(guān)閉、重命名文件或目錄等,也負(fù)責(zé)數(shù)據(jù)塊到具體DataNode的映射。DataNode負(fù)責(zé)處理文件系統(tǒng)客戶端的文件讀寫,并在NameNode的統(tǒng)一調(diào)度下進(jìn)行數(shù)據(jù)庫的創(chuàng)建、刪除和復(fù)制工作。NameNode是所有HDFS元數(shù)據(jù)的管理者,用戶數(shù)據(jù)永遠(yuǎn)不會(huì)經(jīng)過NameNode。
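The block layout described above can be illustrated with a small sketch. It assumes a 128 MiB block size and a replication factor of 2 (the value set for dfs.replication later in this article), and it uses a round-robin placement that is only a stand-in: real HDFS uses a rack-aware placement policy. The function names are hypothetical.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size (128 MiB)
REPLICATION = 2                 # matches dfs.replication used later in this article


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return (offset, length) pairs covering a file of file_size bytes."""
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


def place_replicas(num_blocks, datanodes, replication=REPLICATION):
    """Toy block map: block index -> DataNodes holding a replica.

    Round-robin placement is an illustration only, not the real HDFS policy.
    """
    if replication > len(datanodes):
        raise ValueError("replication cannot exceed the number of DataNodes")
    return {
        block_id: [datanodes[(block_id + r) % len(datanodes)] for r in range(replication)]
        for block_id in range(num_blocks)
    }


blocks = split_into_blocks(300 * 1024 * 1024)  # a 300 MiB file
block_map = place_replicas(len(blocks), ["datanode01", "datanode02", "datanode03"])
for block_id, nodes in block_map.items():
    print(block_id, blocks[block_id], nodes)
```

The dictionary plays the role of the NameNode's block-to-DataNode mapping; the file contents themselves would only ever be exchanged between clients and DataNodes.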

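3. MapReduce overview

Hadoop MapReduce is an open-source implementation of Google's MapReduce.

MapReduce is a programming model for processing large volumes of data. Map applies a specified operation to each independent element of the data set and produces intermediate results as key-value pairs. Reduce then combines all values that share the same key in the intermediate results to produce the final result. This division of work makes MapReduce well suited to data processing in a distributed, parallel environment made up of many machines.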
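As a minimal single-process sketch of this model (not Hadoop's API), the following word count shows the two roles: the map function emits key-value pairs, and the reduce function folds all values that share a key. The names map_phase, reduce_phase, and run_job are made up for this example.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input record."""
    return [(word, 1) for word in line.split()]


def reduce_phase(key, values):
    """Reduce: fold all values that share a key into one result."""
    return key, sum(values)


def run_job(lines):
    """Run map over every record, group by key, then reduce each group."""
    grouped = defaultdict(list)
    for line in lines:
        for key, value in map_phase(line):
            grouped[key].append(value)
    return dict(reduce_phase(k, v) for k, v in sorted(grouped.items()))


result = run_job(["hdfs stores data", "mapreduce processes data"])
print(result)
```

Because each call to map_phase depends only on its own record, and each call to reduce_phase only on one key's values, both phases can be spread across many machines.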

4、MapReduce 架構(gòu)

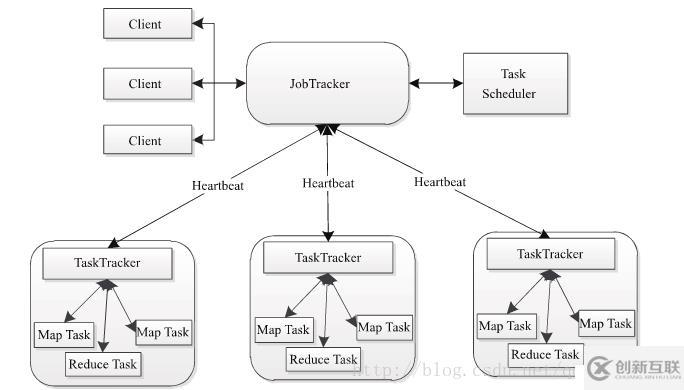

Hadoop MapReduce采用Master/Slave(M/S)架構(gòu),如下圖所示,主要包括以下組件:Client、JobTracker、TaskTracker和Task。

JobTracker

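- The JobTracker is an important process that runs on the master node (the NameNode host) and acts as the scheduler of the MapReduce system. It is the daemon that handles jobs (code submitted by users): it decides which files take part in a job, splits the job into small tasks, and assigns each task to a worker node that holds the data the task needs.

- Hadoop's principle is to run computation close to the data: program and data should be on the same physical node, so the program is sent to wherever the data is. The JobTracker does this work. It also monitors tasks and restarts failed ones, possibly on a different node. Each cluster has exactly one JobTracker, located on the master node, which makes it a single point much like the NameNode.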
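The "move the program to the data" rule can be sketched as a toy scheduler. This is only an illustration under simplifying assumptions (one task per block, a fixed number of free slots per node, first match wins); it is not the JobTracker's actual algorithm, and assign_tasks is a hypothetical name.

```python
def assign_tasks(block_locations, free_slots):
    """Assign one map task per block, preferring a node that stores the block.

    block_locations: dict block_id -> list of nodes holding a replica
    free_slots:      dict node -> number of tasks the node can still accept
    Returns dict block_id -> (node, is_data_local).
    """
    slots = dict(free_slots)  # work on a copy so the caller's dict is untouched
    assignment = {}
    for block_id, holders in block_locations.items():
        local = [node for node in holders if slots.get(node, 0) > 0]
        if local:
            node, is_local = local[0], True
        else:
            # No replica holder has a free slot: fall back to any free node.
            candidates = [node for node, free in slots.items() if free > 0]
            if not candidates:
                raise RuntimeError("no free slots left in the cluster")
            node, is_local = candidates[0], False
        slots[node] -= 1
        assignment[block_id] = (node, is_local)
    return assignment


locations = {
    "blk_0": ["datanode01", "datanode02"],
    "blk_1": ["datanode01", "datanode02"],
    "blk_2": ["datanode01"],
}
assignment = assign_tasks(locations, {"datanode01": 1, "datanode02": 1, "datanode03": 1})
print(assignment)
```

In this run the first two tasks are data-local, while the third has to read its block over the network because the only replica holder is already busy.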

TaskTracker

- The TaskTracker is the last daemon of the MapReduce system. It runs on every slave node, co-located with the DataNode (following the principle of keeping code and data together), and manages the tasks that the JobTracker assigns to its node.

- Each node runs only one TaskTracker, but a TaskTracker can start multiple JVMs to run Map Tasks and Reduce Tasks. It also communicates with the JobTracker to report task status.

- Map Task: parses each input record, passes it to the user-written map() function, and writes the output to local disk (or directly to HDFS for a map-only job).

- Reduce Task: remotely reads its input from the Map Task outputs, sorts the data, and passes it group by group to the user-written reduce() function.
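The hand-off between Map Tasks and Reduce Tasks described above (partition, fetch, sort, group) can be sketched in a few lines. This is a simplified in-memory model: the CRC32-based partitioner is a reproducible stand-in for Hadoop's hash partitioning, and all function names are hypothetical.

```python
from itertools import groupby
from operator import itemgetter
import zlib


def partition(key, num_reducers):
    """Pick the reducer for a key; a stable hash keeps the example reproducible."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def shuffle(map_outputs, num_reducers):
    """Route every (key, value) pair from all map tasks to its reducer."""
    buckets = [[] for _ in range(num_reducers)]
    for output in map_outputs:
        for key, value in output:
            buckets[partition(key, num_reducers)].append((key, value))
    return buckets


def reduce_task(pairs):
    """Sort by key, group equal keys, then apply a summing reduce()."""
    ordered = sorted(pairs, key=itemgetter(0))
    return [
        (key, sum(value for _, value in group))
        for key, group in groupby(ordered, key=itemgetter(0))
    ]


map_outputs = [
    [("boot", 1), ("network", 1)],      # output of map task 0
    [("network", 1), ("timezone", 1)],  # output of map task 1
]
results = [reduce_task(bucket) for bucket in shuffle(map_outputs, num_reducers=2)]
merged = dict(pair for part in results for pair in part)
print(merged)
```

Since a key always hashes to the same reducer, every value for that key ends up in one reduce task, which is what lets each reducer produce a final count on its own.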

II. Installing Hadoop

1. Download and install

# Download the release tarball

wget https://archive.apache.org/dist/hadoop/common/hadoop-2.7.3/hadoop-2.7.3.tar.gz

# Extract the tarball and move it into place

tar xf hadoop-2.7.3.tar.gz && mv hadoop-2.7.3 /usr/local/hadoop

# Create the directories

mkdir -p /home/hadoop/{name,data,log,journal}

2. Configure the Hadoop environment variables

Create the file /etc/profile.d/hadoop.sh:

# HADOOP ENV

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

Make the Hadoop environment variables take effect:

source /etc/profile.d/hadoop.sh

III. Configuring Hadoop

1. Configure hadoop-env.sh

Edit /usr/local/hadoop/etc/hadoop/hadoop-env.sh and change the following settings:

export JAVA_HOME=/usr/java/default

export HADOOP_HOME=/usr/local/hadoop

2. Configure yarn-env.sh

Edit /usr/local/hadoop/etc/hadoop/yarn-env.sh and change the following setting:

export JAVA_HOME=/usr/java/default

3. Configure the DataNode host list (slaves)

Edit /usr/local/hadoop/etc/hadoop/slaves:

datanode01

datanode02

datanode03

4. Configure the core component: core-site.xml

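Edit /usr/local/hadoop/etc/hadoop/core-site.xml so that it reads as follows:

<configuration>

<!-- Default file system URI, pointing at the HDFS nameservice cluster1 (fs.default.name is the deprecated name of fs.defaultFS) -->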

<property>

<name>fs.default.name</name>

<value>hdfs://cluster1:9000</value>

</property>

<!-- Hadoop temporary directory -->

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/data</value>

</property>

<!-- ZooKeeper quorum addresses -->

<property>

<name>ha.zookeeper.quorum</name>

<value>zk01:2181,zk02:2181,zk03:2181</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

<!-- I/O buffer size in bytes; 131702 is about 128 KB (exactly 128 KiB is 131072) -->

<property>

<name>io.file.buffer.size</name>

<value>131702</value>

</property>

</configuration>

5. Configure the file system: hdfs-site.xml

Edit /usr/local/hadoop/etc/hadoop/hdfs-site.xml so that it reads as follows:

<configuration>

<!-- Directory where the NameNode stores its metadata -->

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/hadoop/name</value>

</property>

<!-- Directory where the DataNodes store data blocks -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/hadoop/data</value>

</property>

<!-- Number of replicas HDFS keeps for each block -->

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<!-- Enable WebHDFS, the HTTP REST interface to HDFS -->

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<!-- HDFS nameservice name, cluster1; it must match the one used in core-site.xml -->

<property>

<name>dfs.nameservices</name>

<value>cluster1</value>

</property>

</configuration>

6. Configure the compute framework: mapred-site.xml

Edit /usr/local/hadoop/etc/hadoop/mapred-site.xml so that it reads as follows:

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- Local directories MapReduce uses for intermediate data; several disks can be listed, separated by commas -->

<property>

<name>mapred.local.dir</name>

<value>/home/hadoop/data</value>

</property>

<!-- Administrator-level JVM options for map tasks; sets the map heap size -->

<property>

<name>mapreduce.admin.map.child.java.opts</name>

<value>-Xmx256m</value>

</property>

<!-- Administrator-level JVM options for reduce tasks; sets the reduce heap size -->

<property>

<name>mapreduce.admin.reduce.child.java.opts</name>

<value>-Xmx4096m</value>

</property>

<!-- JVM heap size for each child task process started by a TaskTracker -->

<property>

<name>mapred.child.java.opts</name>

<value>-Xmx512m</value>

</property>

<!-- Task timeout, raised to avoid premature timeouts; the default is 600000 ms (600 s) -->

<property>

<name>mapred.task.timeout</name>

<value>1200000</value>

<final>true</final>

</property>

<!-- File listing the hosts that may not connect to the NameNode; used to remove nodes dynamically -->

<property>

<name>dfs.hosts.exclude</name>

<value>slaves.exclude</value>

</property>

<!-- File listing the TaskTrackers that may not connect to the JobTracker; useful when removing nodes -->

<property>

<name>mapred.hosts.exclude</name>

<value>slaves.exclude</value>

</property>

</configuration>

7. Configure the compute framework: yarn-site.xml

Edit /usr/local/hadoop/etc/hadoop/yarn-site.xml so that it reads as follows:

<configuration>

<!-- ResourceManager hostname -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>namenode01</value>

</property>

<!-- Address the ResourceManager exposes to clients; clients use it to submit and kill applications -->

<property>

<name>yarn.resourcemanager.address</name>

<value>${yarn.resourcemanager.hostname}:8032</value>

</property>

<!-- Address the ResourceManager exposes to ApplicationMasters; they use it to request and release resources -->

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>${yarn.resourcemanager.hostname}:8030</value>

</property>

<!-- ResourceManager web UI address; open it in a browser to view cluster information -->

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>${yarn.resourcemanager.hostname}:8088</value>

</property>

<!-- Address the ResourceManager exposes to NodeManagers; they use it to send heartbeats and receive tasks -->

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>${yarn.resourcemanager.hostname}:8031</value>

</property>

<!-- Address the ResourceManager exposes to administrators; they use it to send administrative commands -->

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>${yarn.resourcemanager.hostname}:8033</value>

</property>

<!-- Maximum memory, in MB, that a single container may request; larger requests are rejected -->

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>983040</value>

</property>

<!-- Scheduler class to use. The available schedulers are FIFO, Capacity Scheduler, and Fair Scheduler -->

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>

</property>

<!-- Maximum number of virtual CPU cores a single container may request. For example, with a minimum of 1 and a maximum of 4, each task of a MapReduce job can request between 1 and 4 virtual cores -->

<property>

<name>yarn.scheduler.maximum-allocation-vcores</name>

<value>512</value>

</property>

<!-- Minimum memory, in MB, allocated to a single container; smaller requests are raised to this value -->

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>2048</value>

</property>

<!-- Enable log aggregation -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<!-- How long aggregated logs are kept on HDFS, in seconds (604800 s = 7 days) -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

<!-- Total number of virtual CPU cores available to containers on a NodeManager; the default is 8 -->

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>12</value>

</property>

<!-- Physical memory, in MB, that a NodeManager can allocate to containers; it cannot be changed while the NodeManager is running; the default is 8192 MB -->

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>8192</value>

</property>

<!-- Whether to run a thread that checks the virtual memory each task is using and kills tasks that exceed their allocation; the default is true -->

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

<!-- Whether to run a thread that checks the physical memory each task is using and kills tasks that exceed their allocation; the default is true -->

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<!-- Maximum virtual memory allowed per 1 MB of physical memory; the default is 2.1 -->

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property>

<!-- Maximum utilization per disk, in percent; a disk above this value is treated as bad and no longer used. Hadoop 2.7 ships with a default of 90.0 -->

<property>

<name>yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentage</name>

<value>98.0</value>

</property>

<!-- Auxiliary service run by the NodeManager; it must be set to mapreduce_shuffle to run MapReduce programs -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- To run MapReduce programs, each NodeManager must load the shuffle server at startup -->

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>

8. Copy the configuration files to the other nodes

cd /usr/local/hadoop/etc/hadoop

scp * datanode01:/usr/local/hadoop/etc/hadoop

scp * datanode02:/usr/local/hadoop/etc/hadoop

scp * datanode03:/usr/local/hadoop/etc/hadoop

chown -R hadoop:hadoop /usr/local/hadoop

chmod 755 /usr/local/hadoop/etc/hadoop

IV. Starting Hadoop

1. Format HDFS (run on namenode01)

hdfs namenode -format

hadoop-daemon.sh start namenode

2. Restart Hadoop (run on namenode01)

stop-all.sh

start-all.sh

V. Checking Hadoop
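Before looking at the running daemons, it can help to sanity-check how the YARN memory settings from yarn-site.xml interact. The sketch below reads Hadoop-style property entries from an inline XML string (a shortened copy of the values used in this article) and applies the commonly described sizing rule: a container request is rounded up to a multiple of the minimum allocation and capped at the maximum. The helper names are hypothetical, and the rounding rule is a simplification of what the scheduler does.

```python
import math
import xml.etree.ElementTree as ET

# Shortened copy of the memory settings from yarn-site.xml in this article.
YARN_SITE = """
<configuration>
  <property><name>yarn.scheduler.minimum-allocation-mb</name><value>2048</value></property>
  <property><name>yarn.scheduler.maximum-allocation-mb</name><value>983040</value></property>
  <property><name>yarn.nodemanager.resource.memory-mb</name><value>8192</value></property>
</configuration>
"""


def load_conf(xml_text):
    """Read <property> entries into a dict (a later duplicate overwrites an earlier one)."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def normalize_request(requested_mb, min_mb, max_mb):
    """Round a request up to a multiple of min_mb and cap it at max_mb."""
    rounded = max(min_mb, math.ceil(requested_mb / min_mb) * min_mb)
    return min(rounded, max_mb)


conf = load_conf(YARN_SITE)
min_mb = int(conf["yarn.scheduler.minimum-allocation-mb"])
max_mb = int(conf["yarn.scheduler.maximum-allocation-mb"])
node_mb = int(conf["yarn.nodemanager.resource.memory-mb"])

for request in (512, 3000):
    print(request, "->", normalize_request(request, min_mb, max_mb), "MB")
print("minimum-size containers per NodeManager:", node_mb // min_mb)
```

With a 2048 MB minimum and 8192 MB per NodeManager, each node can hold at most four minimum-size containers, which is worth keeping in mind when choosing task heap sizes in mapred-site.xml.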

1. Check the processes with jps

[root@namenode01 ~]# jps

17419 NameNode

17780 ResourceManager

18152 Jps

[root@datanode01 ~]# jps

2227 DataNode

1292 QuorumPeerMain

2509 Jps

2334 NodeManager

[root@datanode02 ~]# jps

13940 QuorumPeerMain

18980 DataNode

19093 NodeManager

19743 Jps

[root@datanode03 ~]# jps

19238 DataNode

19350 NodeManager

14215 QuorumPeerMain

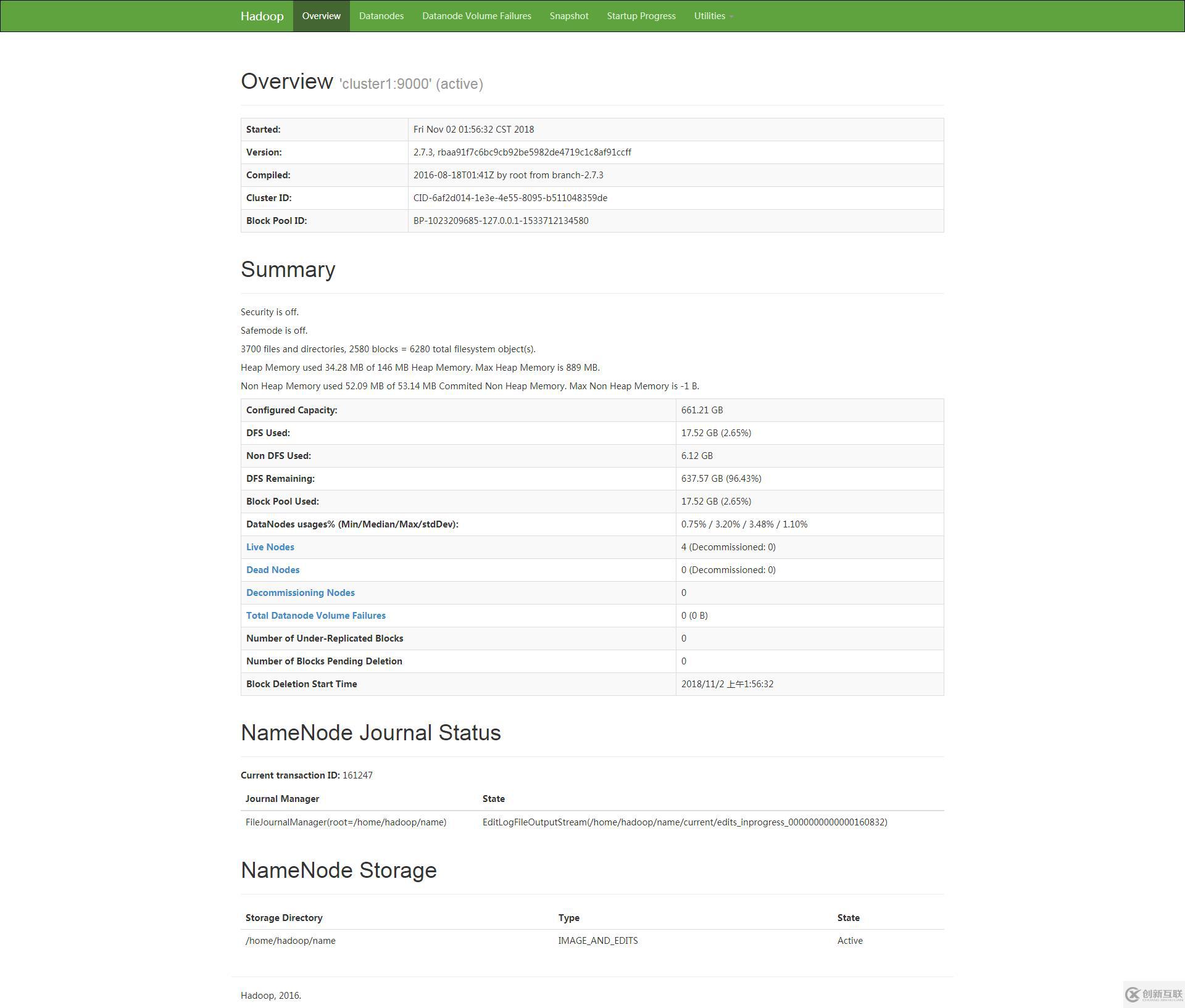

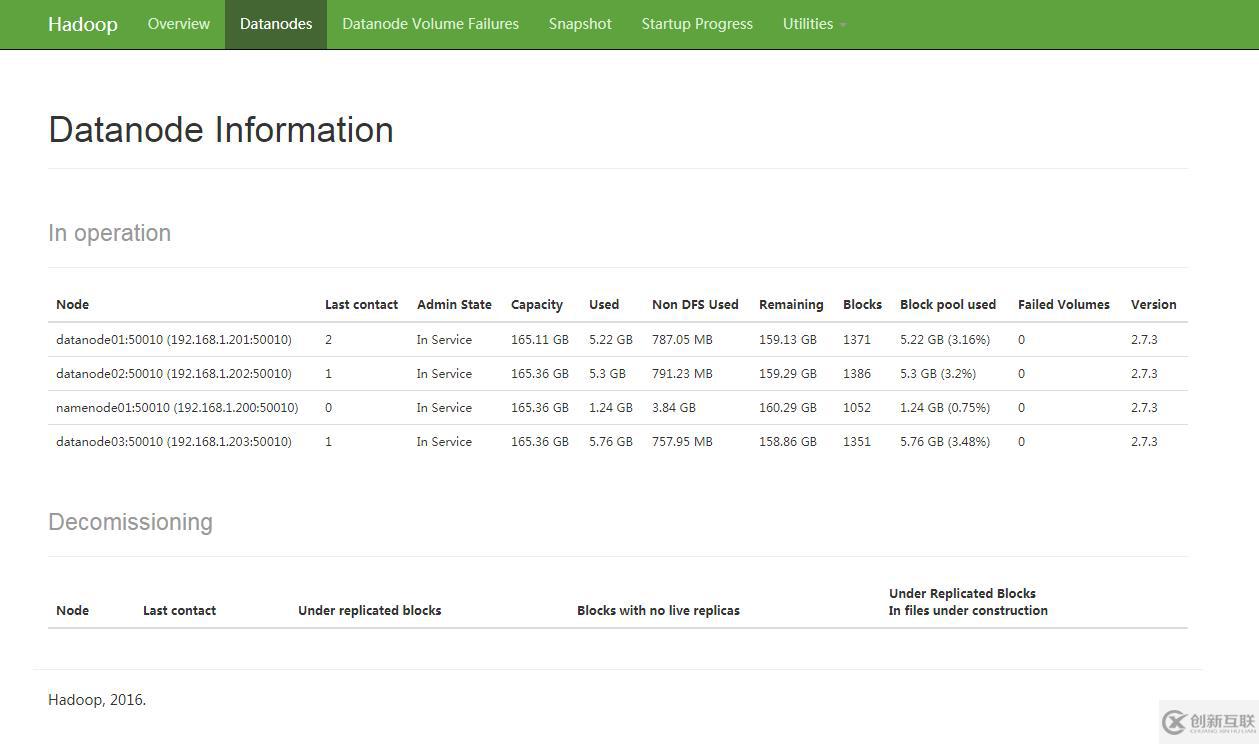

20014 Jps2、HDFS 的 WEB 界面

訪問 http://192.168.1.200:50070/

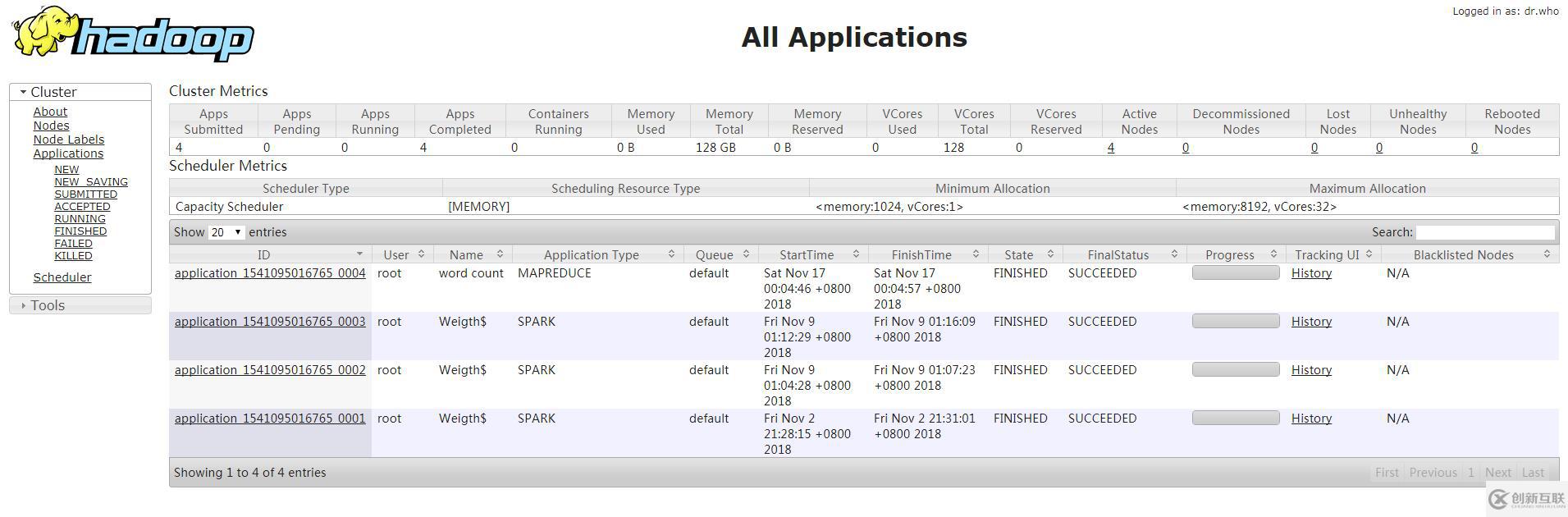

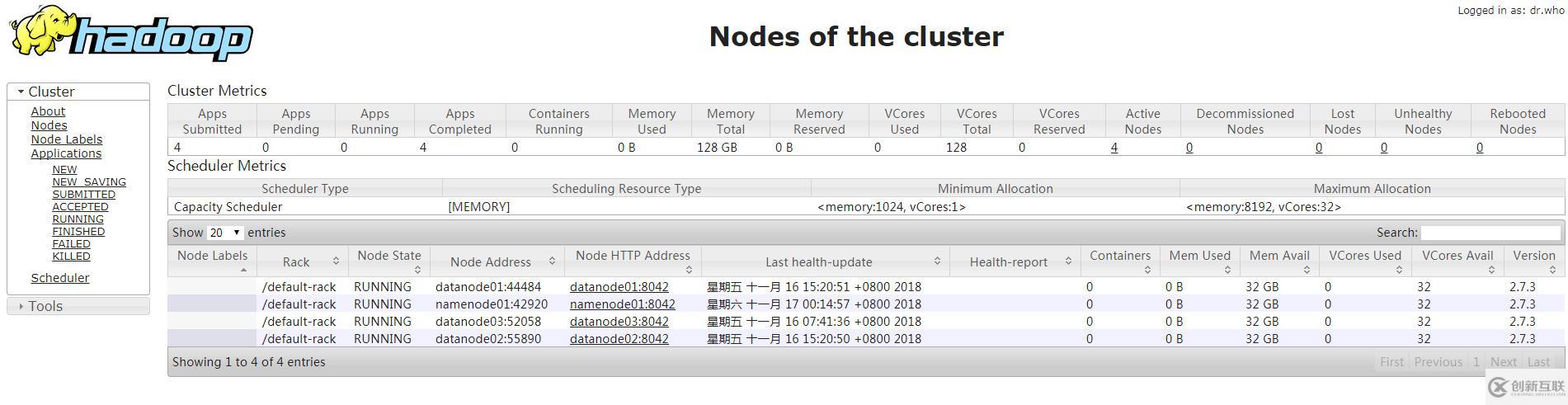

3. YARN web UI

Open http://192.168.1.200:8088/
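The jps check in this section can also be automated. The sketch below parses jps output and reports which expected daemons are missing on a host; the expected sets are taken from the listings above, while the function names and the second sample are assumptions made for illustration.

```python
# Daemons expected per host, based on the jps listings in this section.
EXPECTED = {
    "namenode01": {"NameNode", "ResourceManager"},
    "datanode01": {"DataNode", "NodeManager"},
}


def parse_jps(output):
    """Turn jps output ("<pid> <name>" per line) into a set of process names."""
    names = set()
    for line in output.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0].isdigit():
            names.add(parts[1])
    return names


def missing_daemons(host, jps_output, expected=EXPECTED):
    """Return the expected daemons that do not appear in the jps output."""
    return expected[host] - parse_jps(jps_output)


sample = """2227 DataNode
1292 QuorumPeerMain
2509 Jps
2334 NodeManager"""
print(missing_daemons("datanode01", sample))
print(missing_daemons("namenode01", "17419 NameNode\n18152 Jps"))
```

An empty set means every expected daemon is running; the second call uses made-up output in which the ResourceManager is absent.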

VI. Verifying MapReduce with WordCount

1. Upload the file to be processed to HDFS

[root@namenode01 ~]# hadoop fs -put /root/anaconda-ks.cfg /anaconda-ks.cfg

2. Run wordcount

[root@namenode01 ~]# cd /usr/local/hadoop/share/hadoop/mapreduce/

[root@namenode01 mapreduce]# hadoop jar hadoop-mapreduce-examples-2.7.3.jar wordcount /anaconda-ks.cfg /test

18/11/17 00:04:45 INFO client.RMProxy: Connecting to ResourceManager at namenode01/192.168.1.200:8032

18/11/17 00:04:45 INFO input.FileInputFormat: Total input paths to process : 1

18/11/17 00:04:45 INFO mapreduce.JobSubmitter: number of splits:1

18/11/17 00:04:45 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1541095016765_0004

18/11/17 00:04:46 INFO impl.YarnClientImpl: Submitted application application_1541095016765_0004

18/11/17 00:04:46 INFO mapreduce.Job: The url to track the job: http://namenode01:8088/proxy/application_1541095016765_0004/

18/11/17 00:04:46 INFO mapreduce.Job: Running job: job_1541095016765_0004

18/11/17 00:04:51 INFO mapreduce.Job: Job job_1541095016765_0004 running in uber mode : false

18/11/17 00:04:51 INFO mapreduce.Job: map 0% reduce 0%

18/11/17 00:04:55 INFO mapreduce.Job: map 100% reduce 0%

18/11/17 00:04:59 INFO mapreduce.Job: map 100% reduce 100%

18/11/17 00:04:59 INFO mapreduce.Job: Job job_1541095016765_0004 completed successfully

18/11/17 00:04:59 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=1222

FILE: Number of bytes written=241621

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1023

HDFS: Number of bytes written=941

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=1758

Total time spent by all reduces in occupied slots (ms)=2125

Total time spent by all map tasks (ms)=1758

Total time spent by all reduce tasks (ms)=2125

Total vcore-milliseconds taken by all map tasks=1758

Total vcore-milliseconds taken by all reduce tasks=2125

Total megabyte-milliseconds taken by all map tasks=1800192

Total megabyte-milliseconds taken by all reduce tasks=2176000

Map-Reduce Framework

Map input records=38

Map output records=90

Map output bytes=1274

Map output materialized bytes=1222

Input split bytes=101

Combine input records=90

Combine output records=69

Reduce input groups=69

Reduce shuffle bytes=1222

Reduce input records=69

Reduce output records=69

Spilled Records=138

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=99

CPU time spent (ms)=970

Physical memory (bytes) snapshot=473649152

Virtual memory (bytes) snapshot=4921606144

Total committed heap usage (bytes)=441450496

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=922

File Output Format Counters

Bytes Written=941

3. View the result

[root@namenode01 mapreduce]# hadoop fs -cat /test/part-r-00000

# 11

#version=DEVEL 1

$6$kRQ2y1nt/B6c6ETs$ITy0O/E9P5p0ePWlHJ7fRTqVrqGEQf7ZGi5IX2pCA7l25IdEThUNjxelq6wcD9SlSa1cGcqlJy2jjiV9/lMjg/ 1

%addon 1

%end 2

%packages 1

--all 1

--boot-drive=sda 1

--bootproto=dhcp 1

--device=enp1s0 1

--disable 1

--drives=sda 1

--enable 1

--enableshadow 1

--hostname=localhost.localdomain 1

--initlabel 1

--ipv6=auto 1

--isUtc 1

--iscrypted 1

--location=mbr 1

--onboot=off 1

--only-use=sda 1

--passalgo=sha512 1

--reserve-mb='auto' 1

--type=lvm 1

--vckeymap=cn 1

--xlayouts='cn' 1

@^minimal 1

@core 1

Agent 1

Asia/Shanghai 1

CDROM 1

Keyboard 1

Network 1

Partition 1

Root 1

Run 1

Setup 1

System 4

Use 2

auth 1

authorization 1

autopart 1

boot 1

bootloader 2

cdrom 1

clearing 1

clearpart 1

com_redhat_kdump 1

configuration 1

first 1

firstboot 1

graphical 2

ignoredisk 1

information 3

install 1

installation 1

keyboard 1

lang 1

language 1

layouts 1

media 1

network 2

on 1

password 1

rootpw 1

the 1

timezone 2

zh_CN.UTF-8 1

VII. Using Hadoop

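Show help for the fs commands: hadoop fs -help

Show HDFS disk usage: hadoop fs -df -h

Create a directory: hadoop fs -mkdir

Upload a local file: hadoop fs -put

List files: hadoop fs -ls

Show the contents of a file: hadoop fs -cat

Copy a file: hadoop fs -cp

Download an HDFS file to the local machine: hadoop fs -get

Move a file: hadoop fs -mv

Delete files (recursive, forced): hadoop fs -rm -r -f

Delete a directory: hadoop fs -rm -r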
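The commands above are listed without arguments. As a hedged usage sketch, the helper below builds complete hadoop fs command lines for a typical sequence; the /demo path is a placeholder chosen for this example, and nothing is executed unless execute=True is passed on a machine with a Hadoop client installed.

```python
import subprocess


def hadoop_fs(action, *args):
    """Build the argument list for `hadoop fs -<action> <args...>`."""
    return ["hadoop", "fs", f"-{action}", *args]


def run(cmd, execute=False):
    """Print the command; run it only when execute=True (needs a Hadoop client)."""
    print(" ".join(cmd))
    if execute:
        subprocess.run(cmd, check=True)


# /demo is a placeholder directory used only for this example.
commands = [
    hadoop_fs("mkdir", "-p", "/demo"),
    hadoop_fs("put", "/root/anaconda-ks.cfg", "/demo/"),
    hadoop_fs("ls", "/demo"),
    hadoop_fs("cat", "/demo/anaconda-ks.cfg"),
    hadoop_fs("rm", "-r", "/demo"),
]

for cmd in commands:
    run(cmd)
```

Keeping each command as a list of arguments avoids shell quoting problems when paths contain spaces.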
