How Hive and HBase exchange data

This article walks through integrating Hive with HBase: how the integration works, how to configure it, and how to move data in both directions, along with the errors people commonly hit along the way.


How HBase and Hive integration works


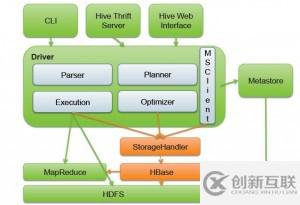

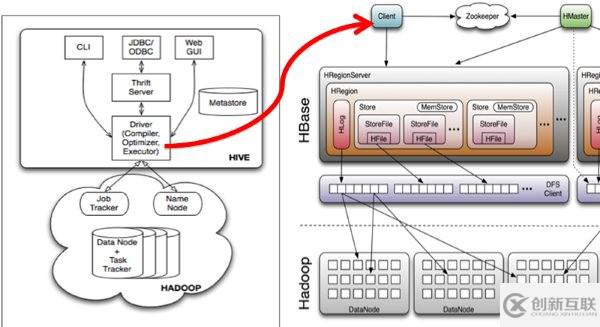

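Hive and HBase have different characteristics: Hive is high-latency, structured, and analysis-oriented, while HBase is low-latency, unstructured, and programming-oriented. A Hive data warehouse running on Hadoop is high-latency, so Hive is integrated with HBase in order to use some of HBase's features. The integration architecture is shown below:

Figure 1: Hive and HBase architecture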

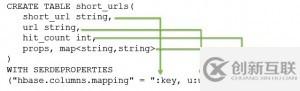

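Integrating Hive with HBase lets Hive make effective use of HBase's storage features, such as row updates and column indexing. Keep the HBase jar versions consistent throughout the integration. The integration requires a mapping between the Hive table and the HBase table: the Hive table's columns and column types are associated with the HBase table's column families and column qualifiers. Every field in the Hive table must exist in HBase, but the Hive table does not have to include every column in HBase. The HBase RowKey is mapped to one chosen Hive field with :key; the remaining Hive fields are mapped either to a whole column family (cf:) or to a single column (cf:cq). Figure 2 shows a Hive table mapped to an HBase table:

Figure 2: A Hive table mapped to an HBase table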
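The mapping rules above can be sketched in a few lines of Python. This is only an illustration of how a mapping string such as ":key,cf1:val" lines up with Hive columns; the function name `pair_columns` is hypothetical, and the sketch assumes one mapping entry per Hive column, which is a simplification rather than Hive's actual parsing logic.

```python
def pair_columns(hive_columns, mapping):
    """Pair Hive column names with the entries of a mapping string.

    Assumption: exactly one mapping entry per Hive column.
    Returns tuples of (hive_column, kind, family, qualifier).
    """
    entries = [entry.strip() for entry in mapping.split(",")]
    if len(entries) != len(hive_columns):
        raise ValueError("need one mapping entry per Hive column")

    pairs = []
    for column, entry in zip(hive_columns, entries):
        if entry == ":key":
            # The row key has no column family or qualifier.
            pairs.append((column, "row key", None, None))
            continue
        family, _, qualifier = entry.partition(":")
        # "cf:" maps a whole column family, "cf:cq" maps a single column.
        kind = "column" if qualifier else "column family"
        pairs.append((column, kind, family, qualifier or None))
    return pairs


if __name__ == "__main__":
    for pair in pair_columns(["key", "value"], ":key,cf1:val"):
        print(pair)
```

A whole-family entry such as "cf:" is reported as a column family, which on the Hive side corresponds to a map-typed column.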

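Overview

Hive is a data warehouse tool built on Hadoop. It maps structured data files to database tables and provides full SQL query support, translating SQL statements into MapReduce jobs. Its main advantage is a low learning curve: simple MapReduce statistics can be written quickly in SQL-like statements without developing a dedicated MapReduce application, which makes it well suited to statistical analysis in a data warehouse.

The Hive/HBase integration works by having the two systems talk to each other through their public APIs. The communication relies mainly on the hive-hbase-handler jar; the rough idea is shown in the figure:

Software versions

Software versions used (search online for anything listed without a download link):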

jdk-6u24-linux-i586.bin

hive-0.9.0.tar.gz http://yun.baidu.com/share/link?shareid=2138315647&uk=1614030671

hbase-0.94.14.tar.gz http://mirror.bit.edu.cn/apache/hbase/hbase-0.94.14/

hadoop-1.1.2.tar.gz http://pan.baidu.com/s/1mgmKfsG

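Install locations

Install directory: /usr/local/ (remember to rename the directories after unpacking)

HBase install path: /usr/local/hbase

Hive install path: /usr/local/hive

Integration steps

The steps to integrate Hive with HBase are as follows:

1. From the HBase install directory /usr/local/hbase:

Copy hbase-0.94.14.jar, hbase-0.94.14-tests.jar, and lib/zookeeper-3.4.5.jar into /usr/local/hive/lib.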

Note:

If hive/lib already contains other versions of these files (for example zookeeper-3.3.1.jar), delete them and use the versions shipped with HBase.

You also need to copy protobuf-java-2.4.0a.jar into /usr/local/hive/lib and /usr/local/hadoop/lib.
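To spot leftover versions such as the zookeeper-3.3.1.jar case mentioned above, a small check over the jar file names can help. The following is a minimal sketch, assuming jar names follow the usual name-version[-classifier].jar pattern; the function name `find_conflicts` is hypothetical, and names that do not fit the pattern (for example guava-r09.jar) are simply ignored.

```python
import re
from collections import defaultdict

# name, then a version starting with a digit, then an optional
# classifier such as "tests" or "security".
JAR_PATTERN = re.compile(
    r"^(?P<name>.+?)-(?P<version>\d[\w.]*)(?:-(?P<classifier>[A-Za-z]\w*))?\.jar$"
)


def find_conflicts(jar_names):
    """Return {artifact: [versions]} for artifacts present in more than one version."""
    versions = defaultdict(set)
    for jar in jar_names:
        match = JAR_PATTERN.match(jar)
        if not match:
            continue  # unrecognised naming scheme, skip it
        artifact = match.group("name")
        if match.group("classifier"):
            # Treat "hbase" and "hbase-tests" as different artifacts.
            artifact += "-" + match.group("classifier")
        versions[artifact].add(match.group("version"))
    return {name: sorted(found) for name, found in versions.items() if len(found) > 1}


if __name__ == "__main__":
    import os
    # Assumption: Hive's lib directory is /usr/local/hive/lib, as in this article.
    lib_dir = "/usr/local/hive/lib"
    if os.path.isdir(lib_dir):
        print(find_conflicts(os.listdir(lib_dir)))
```

An empty result means no artifact was found in two versions; it does not prove that the versions present are the ones HBase expects.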

2. Edit hive-site.xml

In /usr/local/hive/conf, append the following to the very end of hive-site.xml:

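(To jump to the end of the file in vi: press Esc, type :$ and press Enter.)

<property>

<name>hive.querylog.location</name>

<value>/usr/local/hive/logs</value>

</property>

<property>

<name>hive.aux.jars.path</name>

(The property is cut off at this point; a complete hive.aux.jars.path property for /usr/local/hive/lib appears in the test script section at the end.)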

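Note: if hive-site.xml does not exist, create it yourself, or rename the … file and use that.

3. Copy … to … on every node, including ….

4. Copy the files under … to … on every node, including ….

Note: if steps 3 and 4 are skipped, Hive is likely to fail at startup with the following error: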

org.apache.hadoop.hbase.ZooKeeperConnectionException: HBase is able to connect to ZooKeeper but the connection closes immediately. This could be a sign that the server has too many connections (30 is the default). Consider inspecting your ZK server logs for that error and then make sure you are reusing HBaseConfiguration as often as you can. See HTable's javadoc for more information.

at org.apache.hadoop.hbase.zookeeper.ZooKeeperWatcher.

5. Start Hive (tested successfully)

Single-node start

bin/hive -hiveconf hbase.master=master:60000

Cluster start (I have not tested this)

bin/hive -hiveconf hbase.zookeeper.quorum=node1,node2,node3 (list every ZooKeeper node)

If hive.aux.jars.path is not configured in hive-site.xml, you can start Hive as follows instead.

hive --auxpath /opt/mapr/hive/hive-0.7.1/lib/hive-hbase-handler-0.7.1.jar,/opt/mapr/hive/hive-0.7.1/lib/hbase-0.90.4.jar,/opt/mapr/hive/hive-0.7.1/lib/zookeeper-3.3.2.jar -hiveconf hbase.master=localhost:60000
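The long command line above is easier to keep correct when it is assembled from a list of jars. Below is a minimal sketch that builds the same kind of invocation; the helper name `hive_command` is hypothetical, and the jar paths in the demo are just the ones quoted above, so adjust them to your own install.

```python
import shlex


def hive_command(aux_jars, hiveconf):
    """Build a hive invocation with --auxpath and -hiveconf options."""
    # --auxpath takes a single comma-separated list of jar paths.
    command = ["hive", "--auxpath", ",".join(aux_jars)]
    for key, value in hiveconf.items():
        command += ["-hiveconf", f"{key}={value}"]
    return command


if __name__ == "__main__":
    lib = "/opt/mapr/hive/hive-0.7.1/lib"
    jars = [
        f"{lib}/hive-hbase-handler-0.7.1.jar",
        f"{lib}/hbase-0.90.4.jar",
        f"{lib}/zookeeper-3.3.2.jar",
    ]
    # shlex.join (Python 3.8+) produces a shell-safe string for copy and paste.
    print(shlex.join(hive_command(jars, {"hbase.master": "localhost:60000"})))
```

Returning a list rather than a string means the result can be passed directly to `subprocess.run` without any shell quoting concerns.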

Testing showed that adding the following property to Hive's configuration file hive-site.xml also works:

<property>

<name>hive.zookeeper.quorum</name>

<value>node1,node2,node3</value>

<description>The list of zookeeper servers to talk to. This is only needed for read/write locks.</description>

</property>

With this in place, Hive can work with HBase without any extra startup parameters.

Testing Hive to HBase

Run the tests after startup (restart the cluster first).

1. Create a table in Hive that HBase can recognize

The statement is:

create table hbase_table_1(key int, value string)

stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

with serdeproperties ("hbase.columns.mapping" = ":key,cf1:val")

tblproperties ("hbase.table.name" = "xyz");

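If you now open the hbase shell, you will find a new table named 'xyz'.

(You can skip this on a first read: hbase.table.name sets the name of the table in HBase.

For multiple columns: data:1,data:2; for multiple column families: data1:1,data2:1;)

hbase.columns.mapping defines how the Hive columns map to HBase column families and qualifiers. The :key entry is a fixed token that stands for the row key, so the foo field of the pokes table used later as the data source must hold unique values.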
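As a rough illustration of how the two properties fit into the statement, the DDL can be generated from its parts. This is a sketch only: the helper `hbase_backed_table_ddl` is hypothetical, it does no escaping or validation, and it simply reproduces the statement shape used in this article.

```python
def hbase_backed_table_ddl(hive_table, columns, mapping, hbase_table):
    """Render a CREATE TABLE statement for an HBase-backed Hive table.

    columns: list of (name, hive_type) pairs
    mapping: list of mapping entries, one per column, e.g. [":key", "cf1:val"]
    """
    column_list = ", ".join(f"{name} {hive_type}" for name, hive_type in columns)
    mapping_value = ",".join(mapping)
    return "\n".join([
        f"create table {hive_table}({column_list})",
        "stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'",
        f'with serdeproperties ("hbase.columns.mapping" = "{mapping_value}")',
        f'tblproperties ("hbase.table.name" = "{hbase_table}");',
    ])


if __name__ == "__main__":
    print(hbase_backed_table_ddl(
        "hbase_table_1",
        [("key", "int"), ("value", "string")],
        [":key", "cf1:val"],
        "xyz",
    ))
```

Keeping the column list and the mapping list side by side makes it harder to end up with a mapping that has the wrong number of entries.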

Creating a partitioned table

create table hbase_table_1(key int, value string)

partitioned by (day string)

stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

with serdeproperties ("hbase.columns.mapping" = ":key,cf1:val")

tblproperties ("hbase.table.name" = "xyz");

Altering the table is not supported

Hive reports that a non-native table cannot be altered.

hive> ALTER TABLE hbase_table_1 ADD PARTITION (day = '2012-09-22');

FAILED: Error in metadata: Cannot use ALTER TABLE on a non-native table

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask

2. Load data into the HBase-backed table

1. Create a staging table in Hive

create table pokes(foo int,bar string)

row format delimited fields terminated by ',';

Bulk-load the data

load data local inpath '/home/1.txt' overwrite into table pokes;

The contents of 1.txt are:

1,hello

2,pear

3,world

Use SQL to load the data into hbase_table_1

set hive.hbase.bulk=true;

2. Insert the data into the HBase table

insert overwrite table hbase_table_1

select * from pokes;

Loading into a partitioned table

insert overwrite table hbase_table_1 partition (day='2012-01-01')

select * from pokes;

3. Query the HBase-backed table

hive> select * from hbase_table_1;

OK

1 hello

2 pear

3 world

(Note: partitioned tables integrated with HBase have a known problem: select * from table returns no data, while select key,value from table does.)

4. Log in to HBase and check the table's data

hbase shell

hbase(main):002:0> describe 'xyz'

DESCRIPTION ENABLED

{NAME => 'xyz', FAMILIES => [{NAME => 'cf1', BLOOMFILTER => 'NONE', REPLICATION_SCOPE => '0', COMPRESSION => 'NONE', VERSIONS => '3', TTL => '2147483647', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}]} true

1 row(s) in 0.0830 seconds

hbase(main):003:0> scan 'xyz'

ROW COLUMN+CELL

1 column=cf1:val, timestamp=1331002501432, value=hello

2 column=cf1:val, timestamp=1331002501432, value=pear

3 column=cf1:val, timestamp=1331002501432, value=world

The data inserted through Hive is now visible in HBase.

Testing HBase to Hive

1. Create a table in HBase

create 'test1','a','b','c'

put 'test1','1','a','qqq'

put 'test1','1','b','aaa'

put 'test1','1','c','bbb'

put 'test1','2','a','qqq'

put 'test1','2','c','bbb'

2. Map the HBase table into Hive

For a table that already exists in HBase, use CREATE EXTERNAL TABLE in Hive.

For example, if the HBase table is named test1 and has the column families a:, b:, and c:, the Hive statement is:

create external table hive_test

(key int,gid map<string,string>,sid map<string,string>,uid map<string,string>)

stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

with serdeproperties ("hbase.columns.mapping" ="a:,b:,c:")

tblproperties ("hbase.table.name" = "test1");

3. Check the data in test1

Once the table has been created in Hive, query the contents of the HBase table test1:

select * from hive_test;

OK

1 {"":"qqq"} {"":"aaa"} {"":"bbb"}

2 {"":"qqq"} {} {"":"bbb"}

To read the value held in the gid field:

select gid[''] from hive_test;

The query returns:

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_201203052222_0017, Tracking URL = http://localhost:50030/jobdetails.jsp?jobid=job_201203052222_0017

Kill Command = /opt/mapr/hadoop/hadoop-0.20.2/bin/../bin/hadoop job -Dmapred.job.tracker=maprfs:/// -kill job_201203052222_0017

2012-03-06 14:38:29,141 Stage-1 map = 0%, reduce = 0%

2012-03-06 14:38:33,171 Stage-1 map = 100%, reduce = 100%

Ended Job = job_201203052222_0017

OK

qqq

qqq
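The map output above, including the empty-string key used in gid[''], follows from mapping a whole column family to a Hive map column. The toy model below shows the idea in Python; it is not Hive's SerDe, and the function name `family_maps` and the dictionary layout for cells are assumptions made for the illustration.

```python
def family_maps(cells, families):
    """Turn one HBase row into one map per column family.

    cells: {(family, qualifier): value} for a single row
    families: family names in the order of the Hive map columns
    """
    return [
        {qualifier: value for (family, qualifier), value in cells.items() if family == name}
        for name in families
    ]


# The puts in this article use a bare family name ('a', 'b', 'c'),
# so the qualifier is the empty string.
row_1 = {("a", ""): "qqq", ("b", ""): "aaa", ("c", ""): "bbb"}
row_2 = {("a", ""): "qqq", ("c", ""): "bbb"}

if __name__ == "__main__":
    gid, sid, uid = family_maps(row_2, ["a", "b", "c"])
    print(gid, sid, uid)
    print(gid[""])  # the counterpart of gid[''] in the Hive query
```

Row 2 has no cell in family b, which is why its sid column comes back as an empty map.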

If the HBase table test1 instead had the columns user:gid, user:sid, info:uid, and info:level, the Hive statement would be:

create external table hive_test

(key int,user map<string,string>,info map<string,string>)

stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

with serdeproperties ("hbase.columns.mapping" ="user:,info:")

tblproperties ("hbase.table.name" = "test1");

To query the HBase table:

select user['gid'] from hive_test;

Note: to tune Hive's connection to HBase, add the following to the hbase-site.xml file in HADOOP_HOME/conf:

<property>

<name>hbase.client.scanner.caching</name>

<value>10000</value>

</property>

Alternatively, run hive> set hbase.client.scanner.caching=10000; before executing the Hive statement.
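The reason this setting helps is that hbase.client.scanner.caching controls how many rows a scanner fetches per round trip to the region server. A back-of-the-envelope estimate, ignoring region boundaries and the final call that closes the scanner, is sketched below; the function name `scan_round_trips` and the row counts are illustrative assumptions, not measurements.

```python
import math


def scan_round_trips(total_rows, caching):
    """Approximate number of scanner round trips for a full table scan."""
    if caching < 1:
        raise ValueError("caching must be at least 1")
    return math.ceil(total_rows / caching)


if __name__ == "__main__":
    rows = 1_000_000  # hypothetical table size
    for caching in (1, 100, 10000):
        print(caching, scan_round_trips(rows, caching))
```

Larger values trade memory on both client and server for fewer round trips, so a value like 10000 is worth testing against your row size rather than adopting blindly.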

Errors:

Hive errors

1.NoClassDefFoundError

java.lang.NoClassDefFoundError: Could not initialize class org.apache.hadoop.hbase.io.HbaseObjectWritable

Add protobuf-***.jar to the jars path:

//$HIVE_HOME/conf/hive-site.xml

<property>

<name>hive.aux.jars.path</name>

<value>file:///data/hadoop/hive-0.10.0/lib/hive-hbase-handler-0.10.0.jar,file:///data/hadoop/hive-0.10.0/lib/hbase-0.94.8.jar,file:///data/hadoop/hive-0.10.0/lib/zookeeper-3.4.5.jar,file:///data/hadoop/hive-0.10.0/lib/guava-r09.jar,file:///data/hadoop/hive-0.10.0/lib/hive-contrib-0.10.0.jar,file:///data/hadoop/hive-0.10.0/lib/protobuf-java-2.4.0a.jar</value>

</property>
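Long hive.aux.jars.path values like the one above are easy to mistype. The sketch below generates the property from a lib directory and a list of jar names, using only the Python standard library; the helper name `aux_jars_property` is hypothetical, and the output is a single unindented line that you would paste into hive-site.xml yourself.

```python
import xml.etree.ElementTree as ET
from pathlib import PurePosixPath


def aux_jars_property(lib_dir, jar_names):
    """Render a hive.aux.jars.path <property> element as a string."""
    # "file://" plus an absolute path gives the file:///... form used above.
    uris = ["file://" + str(PurePosixPath(lib_dir) / jar) for jar in jar_names]
    prop = ET.Element("property")
    ET.SubElement(prop, "name").text = "hive.aux.jars.path"
    ET.SubElement(prop, "value").text = ",".join(uris)
    return ET.tostring(prop, encoding="unicode")


if __name__ == "__main__":
    print(aux_jars_property(
        "/data/hadoop/hive-0.10.0/lib",
        ["hive-hbase-handler-0.10.0.jar", "hbase-0.94.8.jar", "protobuf-java-2.4.0a.jar"],
    ))
```

The function only formats strings; it does not check that the jars exist on disk.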

HBase errors

java.lang.NoClassDefFoundError: com/google/protobuf/Message

While writing an HBase program, the system reported the error java.lang.NoClassDefFoundError: com/google/protobuf/Message.

After a long search, this page turned out to be useful: http://abloz.com/2012/06/15/hive-execution-hbase-create-the-table-can-not-find-protobuf.html

It reads as follows:

hadoop:1.0.3

hive:0.9.0

hbase:0.94.0

protobuf:$HBASE_HOME/lib/protobuf-java-2.4.0a.jar

As you can see, the HBase jar bundled with Hive 0.9.0 is version 0.92.

[zhouhh@Hadoop48 ~]$ hive --auxpath $HIVE_HOME/lib/hive-hbase-handler-0.9.0.jar,$HIVE_HOME/lib/hbase-0.92.0.jar,$HIVE_HOME/lib/zookeeper-3.3.4.jar,$HIVE_HOME/lib/guava-r09.jar,$HBASE_HOME/lib/protobuf-java-2.4.0a.jar

hive> CREATE TABLE hbase_table_1(key int, value string)

> STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

> WITH SERDEPROPERTIES ("hbase.columns.mapping" = ":key,cf1:val")

> TBLPROPERTIES ("hbase.table.name" = "xyz");

java.lang.NoClassDefFoundError: com/google/protobuf/Message

at org.apache.hadoop.hbase.io.HbaseObjectWritable.<clinit>(HbaseObjectWritable.java

…

Caused by: java.lang.ClassNotFoundException: com.google.protobuf.Message

Fix:

Copy $HBASE_HOME/lib/protobuf-java-2.4.0a.jar into $HIVE_HOME/lib/.

[zhouhh@Hadoop48 ~]$ cp /home/zhouhh/hbase-0.94.0/lib/protobuf-java-2.4.0a.jar $HIVE_HOME/lib/.

hive> CREATE TABLE hbase_table_1(key int, value string)

> STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

> WITH SERDEPROPERTIES ("hbase.columns.mapping" = ":key,cf1:val")

> TBLPROPERTIES ("hbase.table.name" = "xyz");

OK

Time taken: 10.492 seconds

hbase(main):002:0> list 'xyz'

TABLE

xyz

1 row(s) in 0.0640 seconds

In short, it is enough to include protobuf-java-2.4.0a.jar among the referenced jars.

Test script

bin/hive -hiveconf hbase.master=master:60000

hive --auxpath /usr/local/hive/lib/hive-hbase-handler-0.9.0.jar,/usr/local/hive/lib/hbase-0.94.7-security.jar,/usr/local/hive/lib/zookeeper-3.4.5.jar -hiveconf hbase.master=localhost:60000

<property>

<name>hive.aux.jars.path</name>

<value>file:///usr/local/hive/lib/hive-hbase-handler-0.9.0.jar,file:///usr/local/hive/lib/hbase-0.94.7-security.jar,file:///usr/local/hive/lib/zookeeper-3.4.5.jar</value>

</property>

1 nana

2 hehe

3 xixi

hadoop dfsadmin -safemode leave

/home/hadoop

create table hbase_table_1(key int, value string)

stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

with serdeproperties ("hbase.columns.mapping" = ":key,cf1:val")

tblproperties ("hbase.table.name" = "xyz");

drop table pokes;

create table pokes

(id int,name string)

row format delimited fields terminated by ' '

stored as textfile;

load data local inpath '/home/hadoop/kv1.txt' overwrite into table pokes;

insert into table hbase_table_1

select * from pokes;

create external table hive_test

(key int,gid map<string,string>,sid map<string,string>,uid map<string,string>)

stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

with serdeproperties ("hbase.columns.mapping" ="a:,b:,c:")

tblproperties ("hbase.table.name" = "test1");

